Cybercriminals are increasingly leveraging artificial intelligence, or AI, to create more convincing phishing schemes. Traditionally, phishing emails were easier to identify due to red flags such as poor grammar, odd formatting, or misspelled words. However, with AI, those obvious clues are becoming less common, making it crucial for public and affordable housing agencies to remain vigilant.

We spoke with Michael Konopka, Information Security Manager at HAI Group, who shared key insights to help your agency defend against evolving phishing threats:

"These AI-enhanced phishing attacks will become more common and more accurate," Konopka said. "A multi-layer defense strategy that combines people, processes, and technology is essential to counter these increasingly sophisticated threats."

Characteristics of AI-Powered Cyberattacks

AI-powered cyberattacks have several unique characteristics that make them particularly dangerous, according to cybersecurity firm CrowdStrike.

- Attack automation: Cybercriminals can now automate much of the attack process, drastically reducing the need for manual effort and allowing for quicker, large-scale attacks.

- Efficient data gathering: AI accelerates the reconnaissance phase of cyberattacks by automating target research, identifying vulnerabilities, and improving the accuracy of analysis.

- Customization: AI can scrape public data from websites and social media to create highly personalized and convincing phishing messages.

- Reinforcement learning: Malicious AI algorithms continuously adapt to improve attack techniques and evade detection.

- Employee targeting: AI can identify high-value targets within an organization, such as employees who have access to sensitive information or who appear less technologically savvy.

Phishing Email Examples: Pre-AI vs. AI-Generated

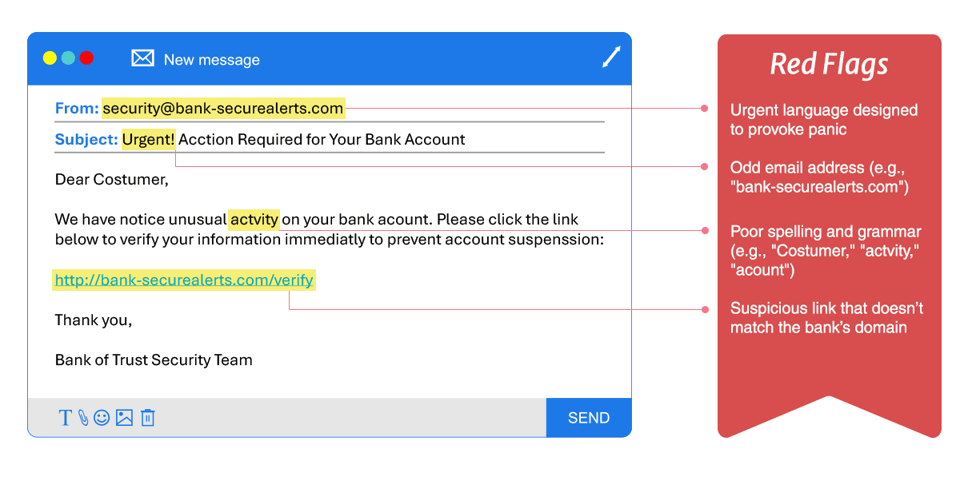

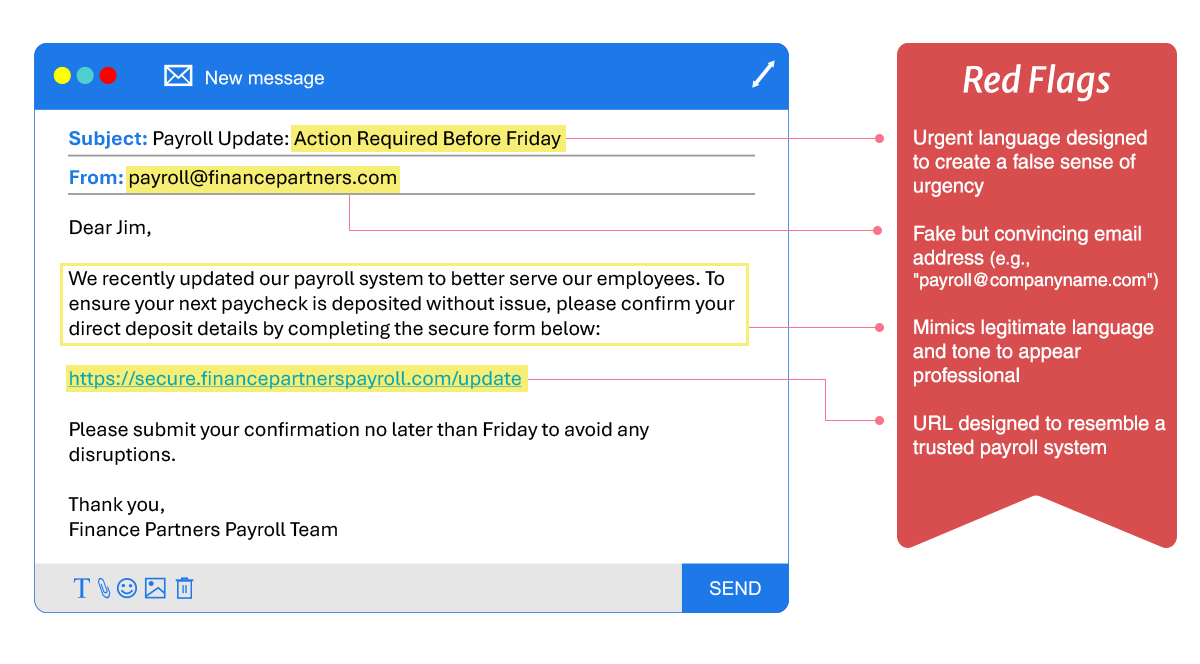

To illustrate the evolution of phishing attacks, here are two sample emails — one with common pre-AI red flags and one enhanced by AI technology:

Pre-AI Phishing Email Example

AI-Generated Phishing Email Example

Konopka explained that while this AI-generated email still has some telltale signs of a phishing attempt, it's far more polished and professional than older scams.

"This makes it more likely to fool someone who is busy and not thinking twice before clicking or opening links," he said.

Konopka warns that email threads fabricated with the help of AI are particularly dangerous because they exploit familiarity and trust.

"When an email appears to be part of an existing conversation, people are less likely to question its authenticity," he explained. "Attackers know this and are using AI to craft convincing email chains that manipulate employees into acting without hesitation."

Fabricated Email Thread Example

How This Scam Works: In this example, the scammer targets Taylor Monroe (a fictitious person created for this example), who is responsible for processing payments. The scammer impersonates Chris Palmer, a fictitious colleague on Finance Partners' accounting team. To add credibility, the scammer includes a fake earlier email that appears to come from Elliot Rogers, the company's CEO. This staged conversation creates a sense of legitimacy and urgency, making Taylor more likely to comply with the fraudulent payment request.

Earlier Fabricated Emails in Thread:

Stay Alert: Red Flags Are Changing

While traditional phishing indicators like misspellings and awkward language are less frequent due to AI's ability to generate professional-sounding content, phishing attempts still exhibit warning signs. Be wary of:

- Unexpected emails requesting urgent action.

- Messages from unfamiliar addresses that claim to come from trusted contacts.

- Fabricated email threads that appear to show ongoing conversations but are entirely false and generated by AI.

- Links that seem legitimate but redirect to suspicious domains.

- Requests for sensitive information or unusual payment instructions.

Fight AI with AI

Modern email filtering technologies can help counteract AI-driven phishing attempts. These tools use advanced algorithms to analyze incoming messages, identify suspicious patterns, and block potential threats before they reach inboxes. Agencies should invest in email security solutions that leverage AI for added protection.

Reinforce Security Awareness Training

"Training your staff remains a critical defense," Konopka emphasized.

Strongly encourage employees to "think before you click." Today's always-connected workplace creates an expectation of quick responses, and phishing attempts often manipulate emotions to provoke impulsive decisions. Teaching staff to pause, assess, and verify suspicious messages before acting can significantly reduce your agency's risk.

Free Cybersecurity Training for Policyholders

HAI Group Online Training is pleased to offer 13 on-demand cybersecurity training courses, available at no cost to HAI Group policyholders and their employees.

How to Access the Courses:

- Log in to HAI Group Online Training. If you or your employees don't have an account, visit the platform and select the sign-up option to create one.

- Once an account is created, access to the cybersecurity courses will be granted within two business days.

For questions or issues accessing the courses, please contact onlinetraining@haigroup.com.

Empower Users with Reporting Tools

Consider providing your staff with tools to easily report suspicious emails. Options include:

- Phish Alert Button (for Outlook users)

- Report Message/Report Phishing (available in Microsoft email services)

- Report Phishing (accessible in Gmail under the "More" menu)

These tools enable employees to flag potential threats for further analysis, improving your agency's ability to identify and mitigate phishing campaigns.

Establish an AI Usage Policy

AI can be a powerful tool, but establishing clear guidelines is essential. Konopka recommends the following when developing your agency's AI policy:

- Set realistic expectations. Some AI use cases are common and practical, while others may pose greater risks.

- Prohibit sharing sensitive data with public AI platforms to avoid unintended exposure.

- Conduct due diligence before using AI tools, verifying how data is handled and assessing the platform's trustworthiness.

Define and Enforce Secure Processes

To safeguard against fraud and data exposure, your agency should implement processes that require human oversight. Examples include:

- Two-person validation for fund transfers or sensitive data changes.

- Form completion and authorization protocols for payroll updates, ACH transfers, and similar transactions.

By combining technology, training, and secure processes, your agency can stay ahead of AI-driven phishing schemes and protect its valuable data and digital resources.

For additional free cybersecurity resources, visit HAI Group's Resource Center. To explore cyber insurance coverage options, contact your HAI Group account executive.

This article is for general information only. HAI Group® makes no representation or warranty about the accuracy or applicability of this information for any particular use or circumstance. Your use of this information is at your own discretion and risk. HAI Group® and any author or contributor identified herein assume no responsibility for your use of this information. You should consult with your attorney or subject matter advisor before adopting any risk management strategy or policy.

HAI Group® is a marketing name used to refer to insurers, a producer, and related service providers affiliated through a common mission, management, and governance. Property-casualty insurance and related services are written or provided by Housing Authority Property Insurance, A Mutual Company; Housing Enterprise Insurance Company, Inc.; Housing Specialty Insurance Company, Inc.; Housing Investment Group, Inc.; and Housing Insurance Services (DBA Housing Insurance Agency Services in NY and MI).
